Deploy your TensorFlow models to mobile instantly

Pallet turns your computer vision models into shareable apps so you can quickly make real-world predictions and spend more time on model improvements than on hosting & deployment.

You've already spent time building and training your model. Skip the hosting, scaling, and boilerplate integrations.

Pallet works with any image classification model made with TensorFlow.

Develop the model manually, or use an AutoML tool like Lobe, Google Cloud Vision, or Teachable Machine.

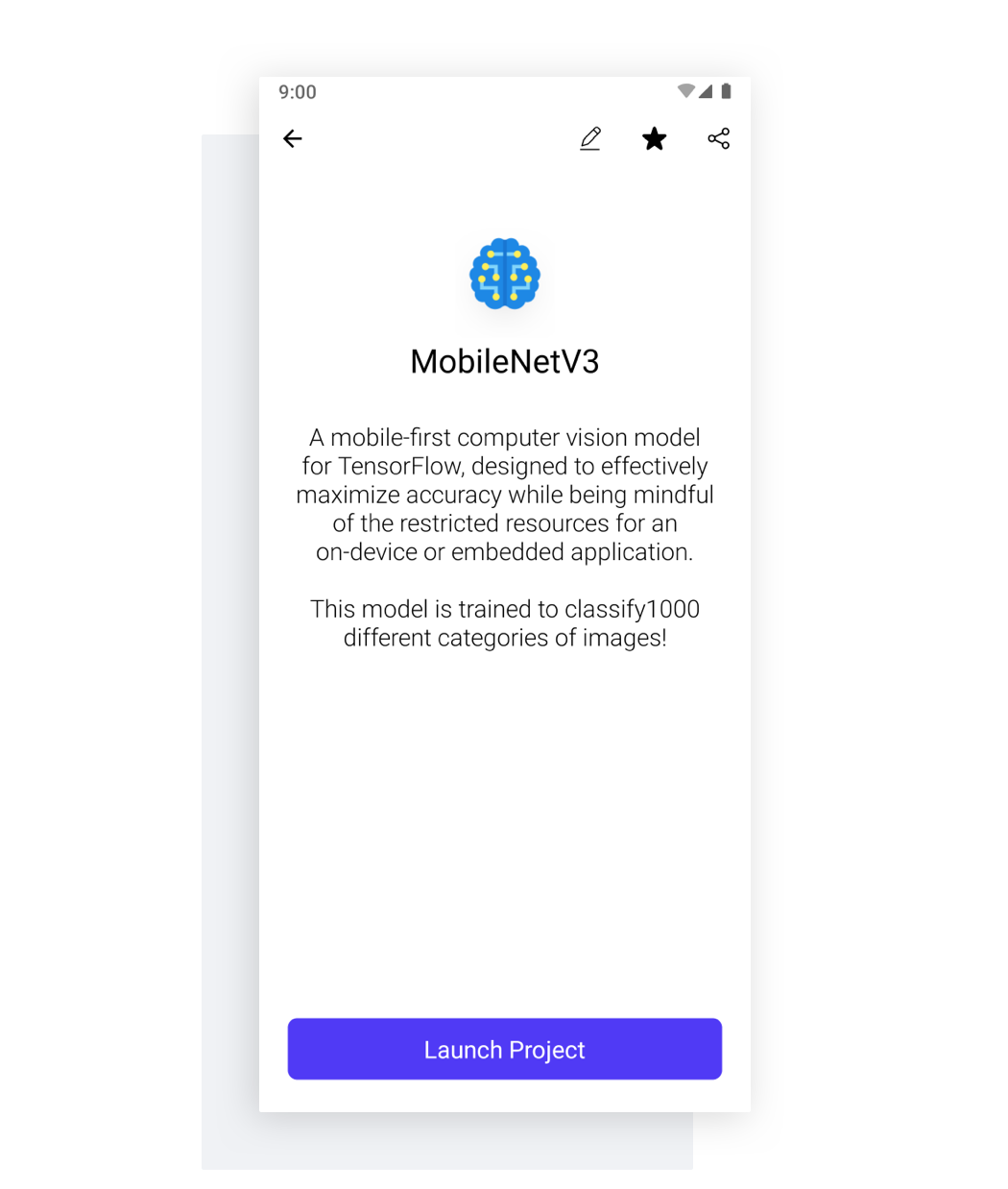

Just drop your TensorFlow Lite model into the web console to deploy it.

Pallet takes care of hosting and serving your model through a mobile app - no code necessary.

Pallet automatically detects the shape and type of your model to create a preprocessing pipeline.
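To make the idea concrete, here is a minimal sketch of how preprocessing settings could be derived from a model's input tensor. It is not Pallet's actual logic: `PreprocessPlan` and `plan_from_input` are hypothetical names, the NHWC layout is an assumption, and so is the rule that float inputs get scaled to [0, 1]. With TensorFlow Lite, the shape and dtype could be read from `tf.lite.Interpreter.get_input_details()`; the dtype is passed here as a plain string to keep the sketch dependency-free.

```python
from typing import NamedTuple, Sequence


class PreprocessPlan(NamedTuple):
    """Hypothetical container for settings derived from the input tensor."""
    height: int
    width: int
    grayscale: bool      # True when the model expects a single channel
    scale_to_unit: bool  # True when pixel values should be mapped to [0, 1]


def plan_from_input(shape: Sequence[int], dtype: str) -> PreprocessPlan:
    """Derive resize and colour settings from an input shape and dtype.

    Assumes the NHWC layout: (batch, height, width, channels).
    """
    if len(shape) != 4:
        raise ValueError(f"expected a 4-D NHWC shape, got {tuple(shape)}")
    _, height, width, channels = shape
    if channels not in (1, 3):
        raise ValueError(f"unsupported channel count: {channels}")
    return PreprocessPlan(
        height=height,
        width=width,
        grayscale=(channels == 1),
        # Assumption: float models take normalised pixels, while
        # quantised integer models take raw 0-255 values.
        scale_to_unit=dtype.startswith("float"),
    )


plan = plan_from_input((1, 224, 224, 3), "float32")
print(plan)
```

A real model may expect a different range (for example [-1, 1]), which is why being able to adjust the detected settings matters.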

Easily customize this pipeline with resizing, inversion, grayscaling, and other operations to suit your model and maximize accuracy.
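The operations named above can be sketched as small composable steps. This is an illustration only, not Pallet's implementation: images are plain nested lists, the function names are hypothetical, resizing uses nearest-neighbour sampling, and grayscaling uses the ITU-R BT.601 luma weights.

```python
from typing import Callable, List, Sequence

Image = List[list]  # rows of pixels; a pixel is an int or an (r, g, b) tuple


def to_grayscale(rgb: Image) -> Image:
    """Collapse (r, g, b) pixels to one intensity using BT.601 luma weights."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in row]
            for row in rgb]


def invert(img: Image) -> Image:
    """Invert 0-255 intensities, e.g. for models trained on white-on-black input."""
    return [[255 - v for v in row] for row in img]


def resize_nearest(height: int, width: int) -> Callable[[Image], Image]:
    """Return a step that resizes to (height, width) by nearest-neighbour sampling."""
    def op(img: Image) -> Image:
        src_h, src_w = len(img), len(img[0])
        return [[img[y * src_h // height][x * src_w // width]
                 for x in range(width)]
                for y in range(height)]
    return op


def run_pipeline(img: Image, steps: Sequence[Callable[[Image], Image]]) -> Image:
    """Apply each preprocessing step in order."""
    for step in steps:
        img = step(img)
    return img


# A 2x2 checkerboard: white, black / black, white.
rgb = [
    [(255, 255, 255), (0, 0, 0)],
    [(0, 0, 0), (255, 255, 255)],
]
out = run_pipeline(rgb, [to_grayscale, invert, resize_nearest(4, 4)])
for row in out:
    print(row)
```

Because each step is just a function from image to image, reordering or dropping a step is a one-line change, which is the kind of tuning the pipeline is meant to make easy.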

Immediately use your model to make predictions in real-time or save predictions for later review.

With on-device inference, predictions run without a network round trip, so you can evaluate your model with minimal latency while keeping your data private & secure.

Quickly learn how your model performs in the wild, running on a CPU, GPU, or with the Neural Networks API (NNAPI).
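For a rough sense of what comparing backends involves, a timing harness along these lines can be used. It is a sketch under stated assumptions: `measure_latency` is a hypothetical helper, and `fake_predict` is a stand-in for whatever call actually runs the model on the chosen backend.

```python
import statistics
import time
from typing import Callable, Dict


def measure_latency(predict: Callable[[], object],
                    runs: int = 50,
                    warmup: int = 5) -> Dict[str, float]:
    """Time repeated calls to `predict` and summarise them in milliseconds."""
    # Warm-up calls are discarded so one-off setup cost does not skew results.
    for _ in range(warmup):
        predict()

    samples_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        predict()
        samples_ms.append((time.perf_counter() - start) * 1000.0)

    samples_ms.sort()
    p95_index = min(len(samples_ms) - 1, int(0.95 * len(samples_ms)))
    return {
        "median_ms": statistics.median(samples_ms),
        "p95_ms": samples_ms[p95_index],
        "max_ms": samples_ms[-1],
    }


def fake_predict() -> int:
    """Stand-in workload; replace with a real inference call."""
    return sum(i * i for i in range(10_000))


stats = measure_latency(fake_predict)
print(stats)
```

Reporting the median alongside a tail figure such as the 95th percentile helps separate typical performance from occasional slow predictions.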

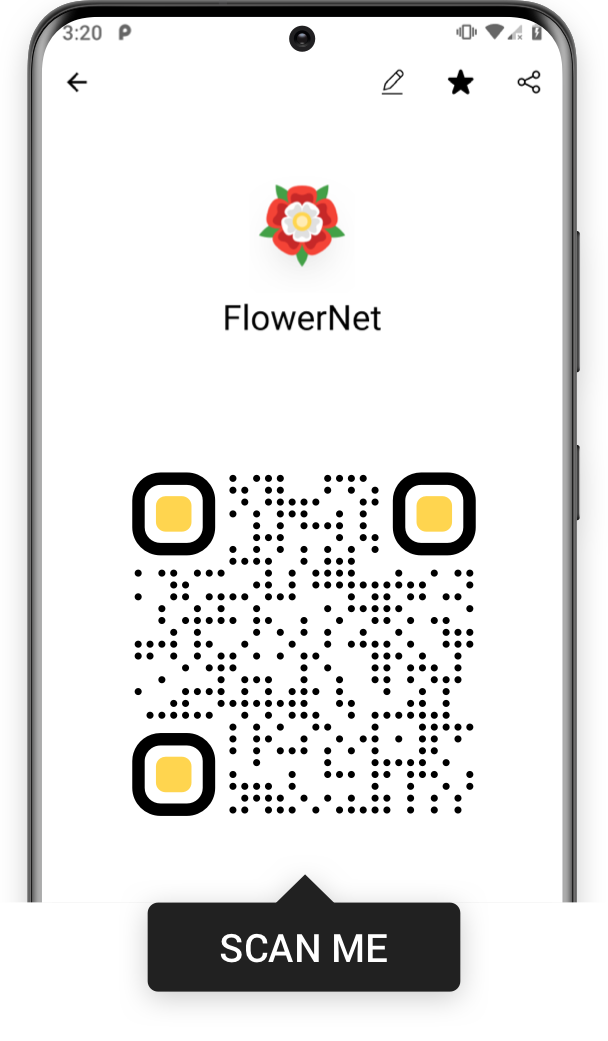

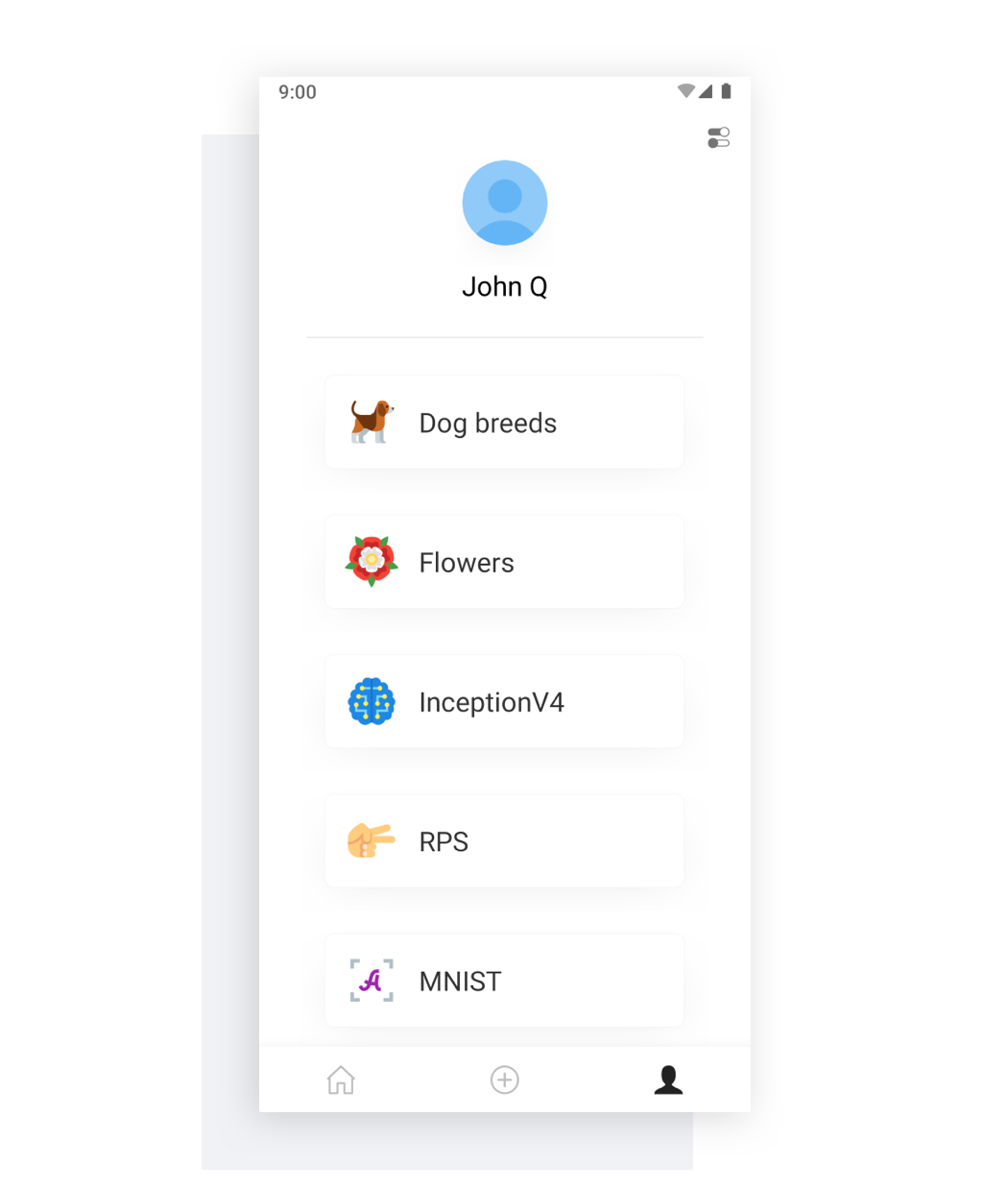

Send your model to any number of friends and colleagues in just one tap.

A unique link is generated for each model that you deploy and opens directly to your model when activated.

Scan the QR code to try FlowerNet now →

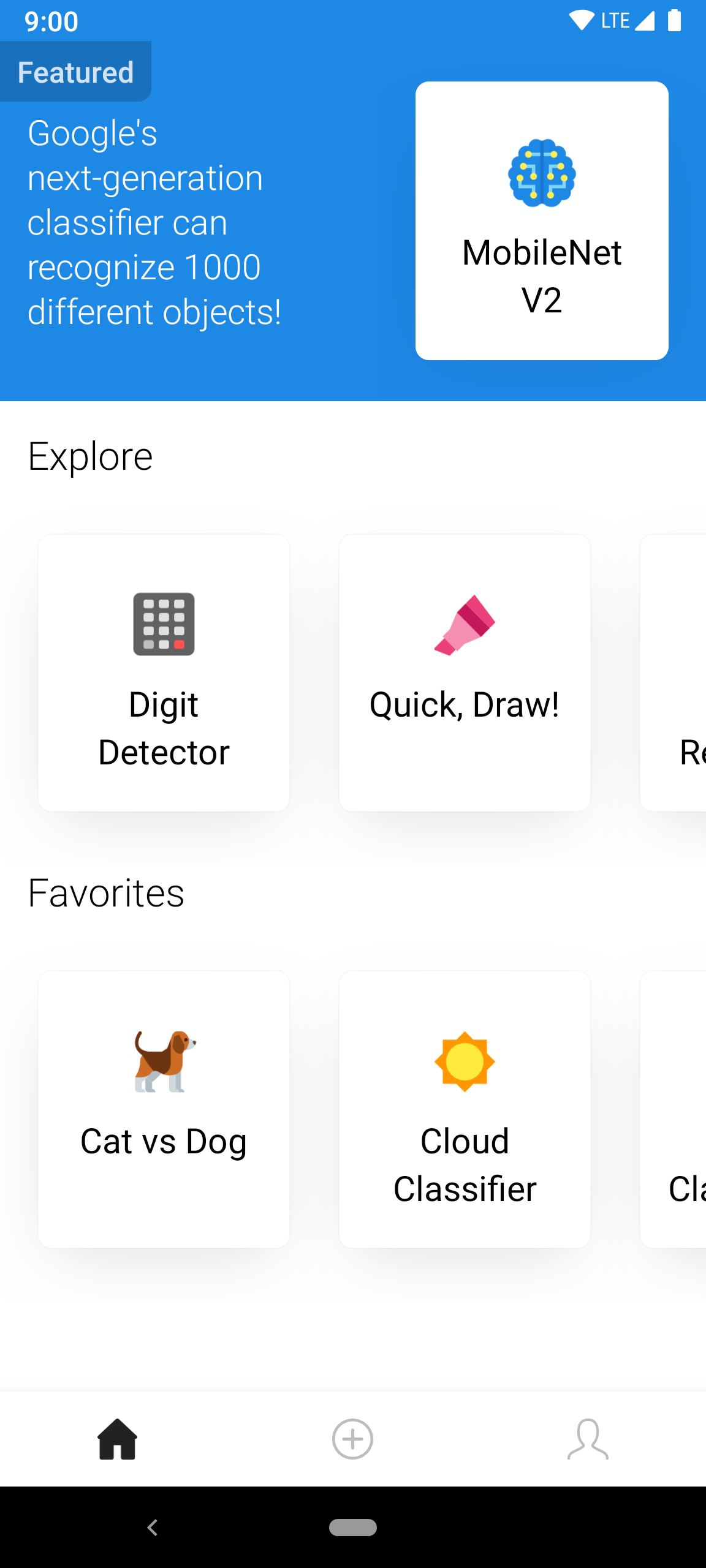

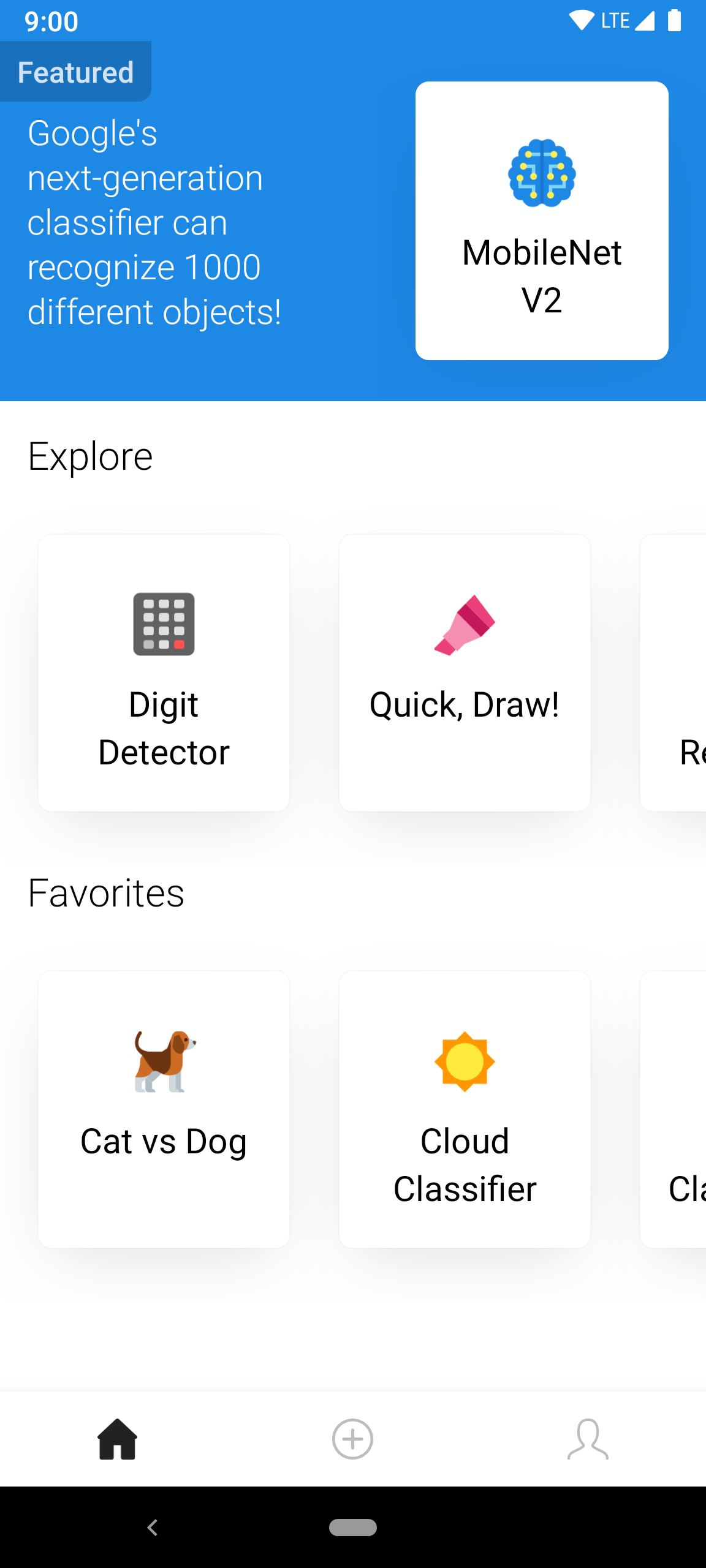

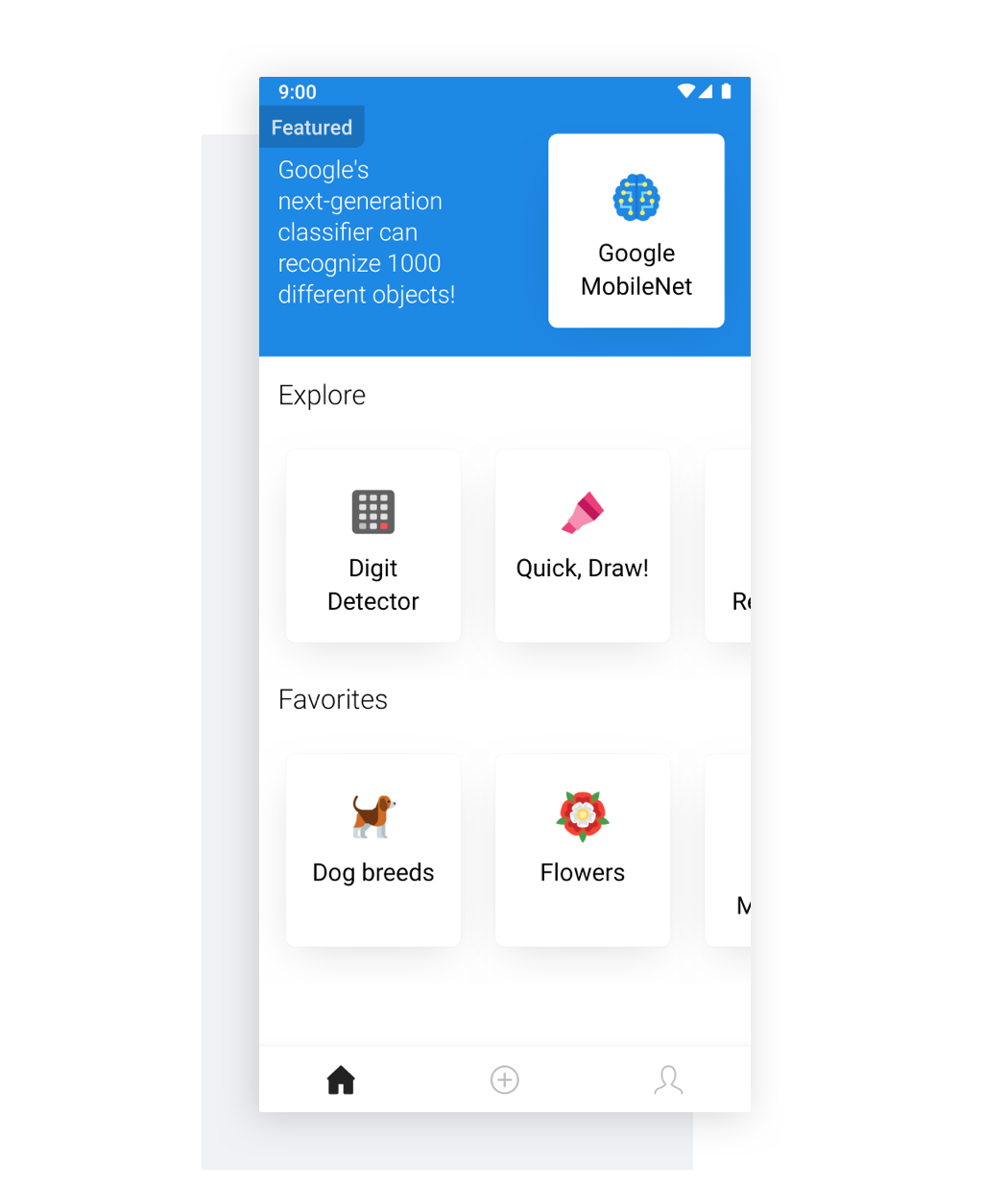

Access dozens of machine learning models, anytime, in one place right on your phone.

Pallet gives your image classification models a usable, shareable interface in seconds.

On-device inference keeps your data private & secure.

Evaluate your models without network latency.

Make predictions in real-time, or save predictions for later review.

Tune preprocessing options to maximize accuracy.

Push unlimited updates. Public models see the latest version instantly.

Share your model with any number of friends and colleagues in one tap.
